Software & Datasets

BASEPROD: The Bardenas Semi-Desert Planetary Rover Dataset

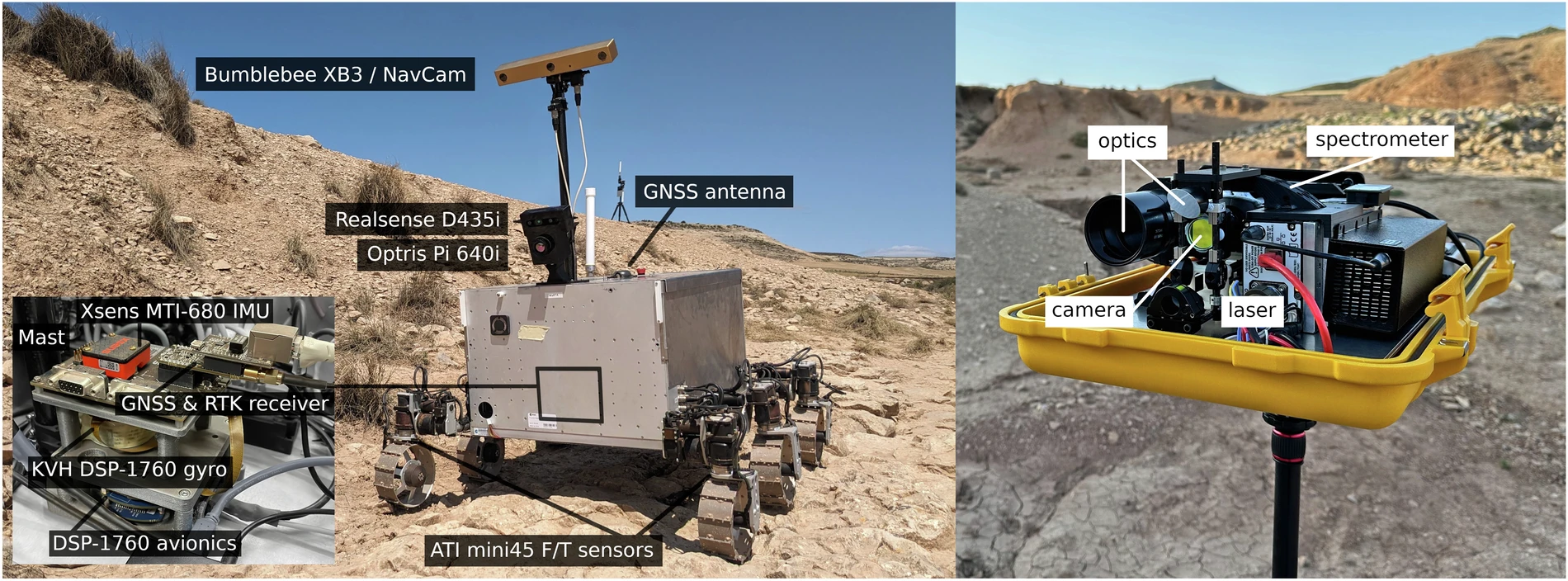

Dataset acquisitions devised specifically for robotic planetary exploration are key for the advancement, evaluation, and validation of novel perception, localization, and navigation methods in representative environments. Originating in the Bardenas semi-desert in July 2023, the data presented in this Data Descriptor is primarily aimed at Martian exploration and contains relevant rover sensor data from approximately 1.7 km of traverses, a high-resolution 3D map of the test area, laser-induced breakdown spectroscopy recordings of rock samples along the rover path, as well as local weather data. In addition to optical cameras and inertial sensors, the rover features a thermal camera and six force-torque sensors. This setup enables, for example, the study of future localization, mapping, and navigation techniques in unstructured terrains for improved Guidance, Navigation, and Control (GNC). The main features of this dataset are the combination of scientific and engineering instrument data, as well as the inclusion of the thermal camera and force-torque sensors in particular.

Reference: Levin Gerdes, Tim Wiese, Raúl Castilla Arquillo, Laura Bielenberg, Martin Azkarate, Hugo Leblond, Felix Wilting, Joaquín Ortega Cortés, Alberto Bernal, Santiago Palanco & Carlos Pérez del Pulgar, BASEPROD: The Bardenas Semi-Desert Planetary Rover Dataset, Scientific Data, 11, 1054 (2024)

Hardware-Accelerated Mars Sample Localization Via Deep Transfer Learning From Photorealistic Simulations

The goal of the Mars Sample Return campaign is to collect soil samples from the surface of Mars and return them to Earth for further study. The samples will be acquired and stored in metal tubes by the Perseverance rover and deposited on the Martian surface. As part of this campaign, it is expected that the Sample Fetch Rover will be in charge of localizing and gathering up to 35 sample tubes over 150 Martian sols. Autonomous capabilities are critical for the success of the overall campaign and for the Sample Fetch Rover in particular. This work proposes a novel system architecture for the autonomous detection and pose estimation of the sample tubes. For the detection stage, a Deep Neural Network and transfer learning from a synthetic dataset are proposed. The dataset is created from photorealistic 3D simulations of Martian scenarios. Additionally, the sample tube poses are estimated using Computer Vision techniques such as contour detection and line fitting on the detected area. Finally, laboratory tests of the Sample Localization procedure are performed using the ExoMars Testing Rover on a Mars-like testbed. These tests validate the proposed approach in different hardware architectures, providing promising results related to the sample detection and pose estimation.

Reference: Castilla-Arquillo, R., Pérez-del-Pulgar, C. J., Paz-Delgado, G. J., and Gerdes, L. Hardware-accelerated Mars sample localization via deep transfer learning from photorealistic simulations, IEEE Robotics & Automation Letters, 7 (4), 12555-12561 (2022)
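
The abstract above mentions estimating tube poses with contour detection and line fitting on the detected area. The following is a minimal, standard-library-only sketch of that general idea, not the authors' implementation: it assumes the detection stage has already been reduced to a binary mask (a hypothetical input format), extracts the mask's boundary pixels as a crude contour, and fits a line to them by total least squares. The function names `boundary_pixels` and `fit_line` are illustrative; a real pipeline would more likely rely on an image-processing library.

```python
import math


def boundary_pixels(mask):
    """Return (row, col) of foreground pixels that touch background or the image edge.

    `mask` is a list of rows containing 0/1 values (assumed input format).
    """
    rows, cols = len(mask), len(mask[0])
    contour = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            # A foreground pixel is on the boundary if any 4-neighbour is missing.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if rr < 0 or rr >= rows or cc < 0 or cc >= cols or not mask[rr][cc]:
                    contour.append((r, c))
                    break
    return contour


def fit_line(points):
    """Total-least-squares line fit through (row, col) points.

    Returns the centroid as (x, y) = (col, row) and the line angle in degrees,
    in [0, 180), measured from the image x-axis towards the y-axis (y points down).
    """
    n = len(points)
    cx = sum(c for _, c in points) / n
    cy = sum(r for r, _ in points) / n
    sxx = sum((c - cx) ** 2 for _, c in points)
    syy = sum((r - cy) ** 2 for r, _ in points)
    sxy = sum((c - cx) * (r - cy) for r, c in points)
    # Orientation of the principal axis of the point scatter.
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return (cx, cy), math.degrees(angle) % 180.0


if __name__ == "__main__":
    # Synthetic "tube": a 2 x 8 pixel horizontal bar in a 6 x 10 image.
    mask = [[1 if 2 <= r <= 3 and 1 <= c <= 8 else 0 for c in range(10)]
            for r in range(6)]
    centre, angle_deg = fit_line(boundary_pixels(mask))
    print(centre, angle_deg)
```

The centroid and angle together give a 2D image-plane pose for an elongated object; recovering a metric pose on the terrain would additionally require camera calibration and depth information, which this sketch does not cover.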