Student Projects

If you are an undergraduate or graduate student at UMA interested in space robotics, below you will find the latest available projects in our lab.

If you would like to do a project with us but cannot find a suitable one, if all projects are already assigned, or if you have a project idea you’d like to pursue in our lab, please email us explaining your proposed idea.

Please note that some projects are exclusively available for undergraduate students, while others are open for graduate students or both. Some of our projects are conducted in collaboration with one of our partners within the framework of national and international research projects. Our partners include the European Space Agency, NASA Ames Research Center, DFKI, University of Luxembourg, Delft University of Technology, Tohoku University, EPFL, and many more.

How to apply

Please send an email to srl@uma.es or directly to the person in charge of each project with the following: 1) your CV, 2) your bachelor’s or master’s transcripts (you can find them via UMA’s website), 3) the title of the project you are applying for, and 4) (optional) a brief description of any experience relevant to the project’s scope.

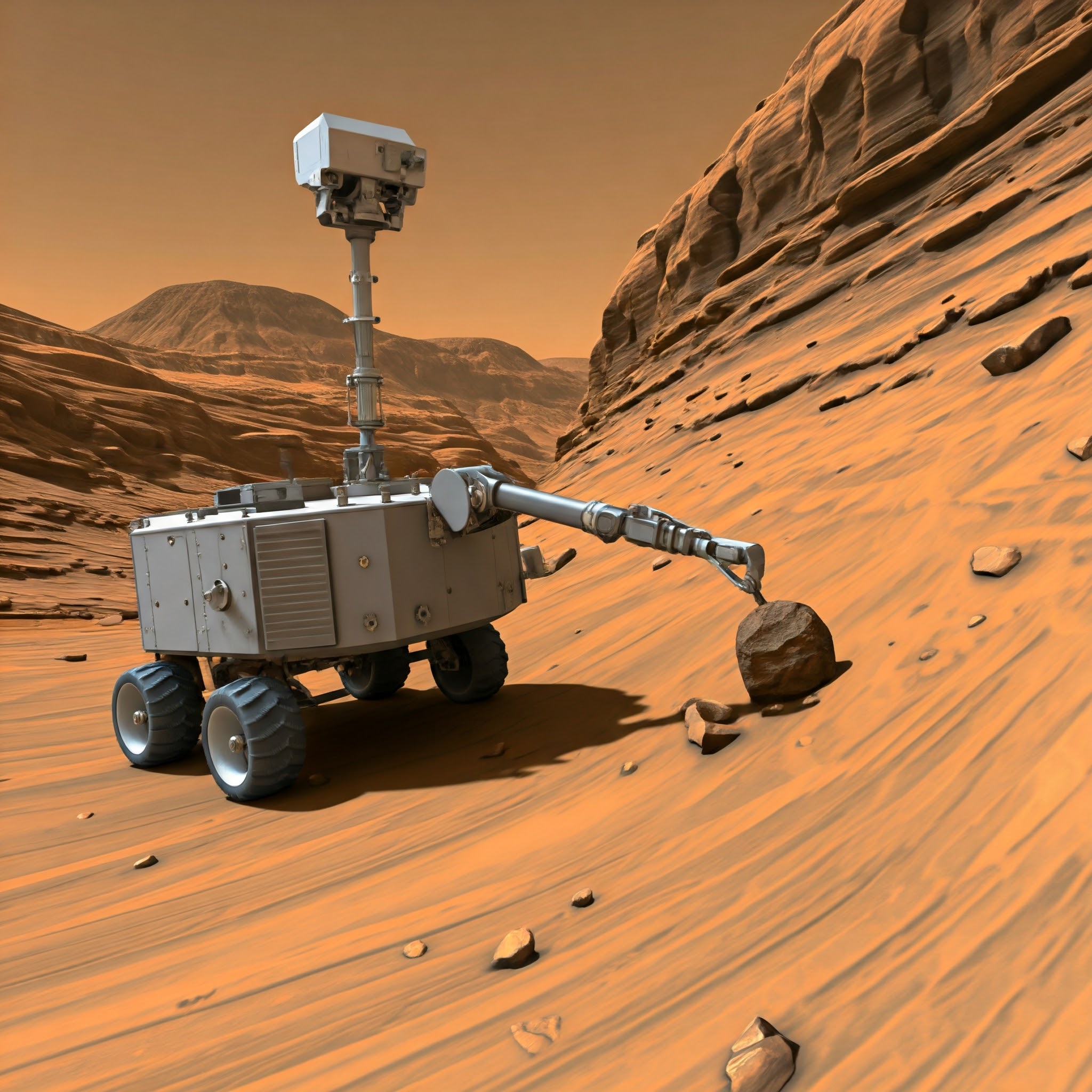

Projects

#1 Light-invariant Pose Estimation for Relative Localization in Dark, GPS-denied Environments / Localización Relativa en Entornos Oscuros y sin GPS - Available

| In this project, the goal is to develop an improved feature descriptor and matching technique for vision-based robot localization when operating across dark or perceptually degraded environments without access to a global positioning system or an active sensing device. To improve the reliability of location estimates, several studies have focused on redefining feature descriptors like SIFT, SURF, or FAST to make them less sensitive to illumination changes. You will start by evaluating the suitability of existing feature identification and matching techniques often used in robot localization. The use cases for this approach will be the autonomous exploration of lunar permanently shadowed regions (PSRs) and underground lava caves based on the use of optical cameras. Keywords: #navigation, #vision, #darkness, #planetary-exploration, #lunar |

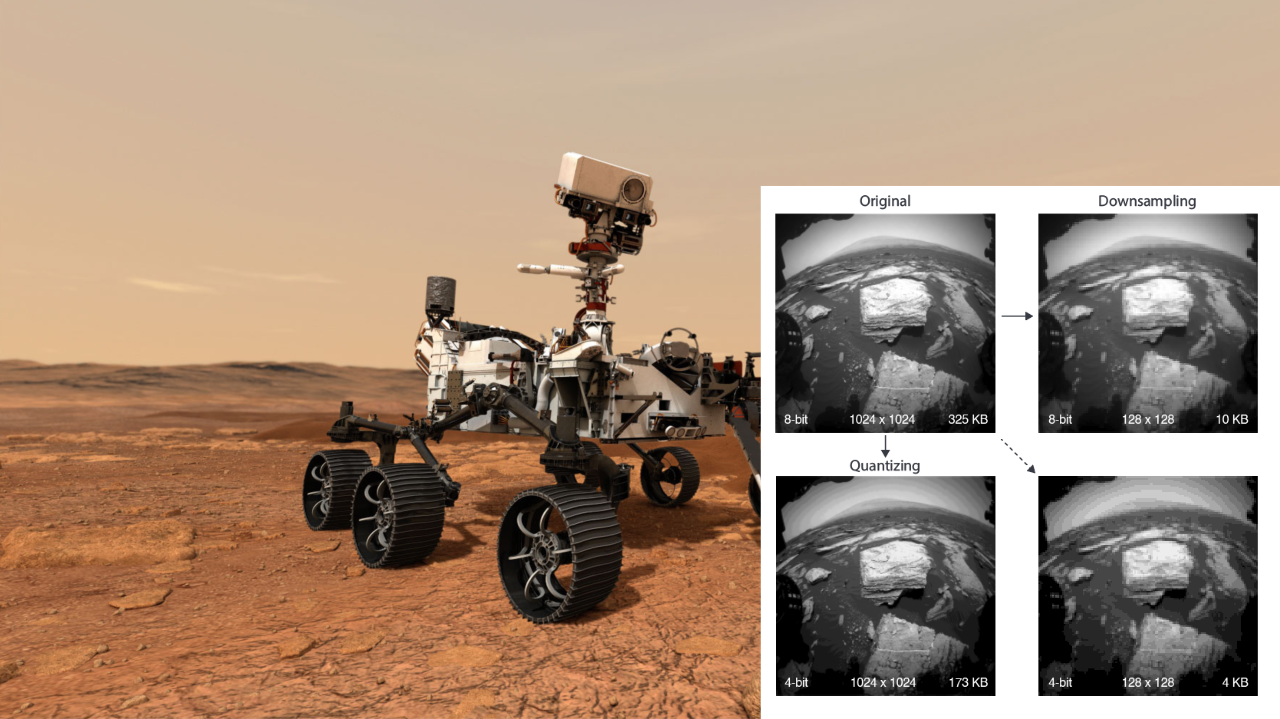

#2 Low-dimensional State Estimation for Efficient Autonomous Navigation / Estimación del Estado de un Robot Mediante Imágenes de Dimensionalidad Baja para una Navegación Autónoma Eficiente - Available

| Nature offers many examples of skillful navigators whose vision systems are not known for providing the highest-quality signals, trading sharpness for a deeper focus, a wider field of view, or increased light sensitivity. In this project, you will study the feasibility of using quantized or native low-dimensional visual data (<8-bit images) for autonomously localizing robots in extreme planetary environments. Limited intensity values could lead to a lack of distinctive features and to increased noise and ambiguity in matching. Developing feature descriptors specifically optimized for lower bit-depth images could pave the way for new research avenues, particularly in the application of single-photon cameras in robotics. The goal is to develop and test a vision-based state estimation algorithm tailored for low-dimensional data. Keywords: #navigation, #localization, #image-processing, #vision, #planetary-exploration |
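As a rough illustration of what "quantized" visual data means here, the sketch below reduces 8-bit intensities to a lower bit depth by dropping the least-significant bits. The function name `quantize` and the sample pixel values are hypothetical; this is only one simple way to quantize an image and is not part of the project's codebase.

```python
def quantize(pixels, bits):
    """Reduce 8-bit intensities (0-255) to `bits` bits by dropping low-order bits."""
    if not 1 <= bits <= 8:
        raise ValueError("bits must be between 1 and 8")
    shift = 8 - bits
    return [p >> shift for p in pixels]


row = [0, 37, 127, 128, 200, 255]  # hypothetical 8-bit pixel row

four_bit = quantize(row, 4)  # 16 possible intensity levels
one_bit = quantize(row, 1)   # 2 possible intensity levels

print(four_bit)
print(one_bit)
```

At 1 bit, the values 0, 37, and 127 collapse to the same level, which is the kind of lost distinctiveness that makes feature matching ambiguous at low bit depths.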

#3 Fast Vision-based Autonomous Navigation Across Extreme, Off-road Environments / Navegación Autónoma Rápida a Través de Entornos Extremos Basada en Visión Artificial - Available

| A number of upcoming planetary exploration missions demand speeds orders of magnitude greater than current operational rover speeds. In this project, you will evaluate, design, implement, and test a navigation pipeline aimed at estimating the position and orientation of a robot when traversing an unstructured environment at a speed of 1 m/s or faster across different times of the day (daytime vs nighttime). Performance will be compared against state-of-the-art solutions for off-road autonomous navigation and evaluated for potential implementation in space-grade processing units. An evaluation of the accuracy and precision of the pose estimates will be conducted. The goal is to benchmark this navigation pipeline as the foundation for future developments. Both conventional and learning-based approaches could be considered. Keywords: #navigation, #off-road, #speed, #planetary-exploration, #computer-vision |

#4 Efficient Machine Learning for Single-Photon Vision / Aprendizaje Automático Eficiente para Visión de Fotón Único - Available

| Single-Photon Avalanche Diode (SPAD) cameras are capable of detecting individual photons, capturing low-dimensional visual data (1-bit frames) at very fast speeds (up to 100 kfps). Due to their high light sensitivity and fast acquisition rates, SPADs are ideal candidates for applications requiring real-time decision-making under perceptually degraded scenarios such as night drives or high dynamic range imaging. However, the high data volume generated by a SPAD, the restrictive value range and loss of intensity continuity at lower bit depths, and the Poisson noise present in low bit-depth frames pose challenges that result in significantly longer data processing times, making SPADs unsuitable for computationally constrained devices. This project explores novel approaches for developing a machine learning model tailored for SPAD-based vision tasks. The goal is to design, implement, and optimize a neural network that significantly reduces the computational demands and memory footprint required for processing SPAD images while maintaining adequate performance for real-time imaging and decision-making tasks. Keywords: #spad, #quantum, #computer-vision, #machine-learning, #advanced-sensing |
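To make the 1-bit frames and their Poisson noise more concrete, here is a minimal sketch of a single idealized pixel. It assumes photon arrivals per frame follow a Poisson distribution with mean `flux`, so a frame reads 1 with probability 1 − exp(−flux), and it recovers the flux by inverting that relation over many frames. The function names, the flux value, and the frame count are hypothetical; real SPAD effects such as dark counts and dead time are ignored.

```python
import math
import random


def simulate_binary_frames(flux, n_frames, rng):
    """Count how many of n_frames 1-bit frames register at least one photon."""
    p_detect = 1.0 - math.exp(-flux)  # P(Poisson(flux) >= 1)
    return sum(1 for _ in range(n_frames) if rng.random() < p_detect)


def estimate_flux(ones, n_frames):
    """Invert p = 1 - exp(-flux) using the observed fraction of 1s."""
    if ones >= n_frames:
        raise ValueError("pixel saturated: every frame fired")
    return -math.log(1.0 - ones / n_frames)


rng = random.Random(0)   # fixed seed so the run is repeatable
true_flux = 0.5          # hypothetical mean photons per frame
n_frames = 20000         # hypothetical number of 1-bit frames

ones = simulate_binary_frames(true_flux, n_frames, rng)
print(round(estimate_flux(ones, n_frames), 3))
```

Even for one pixel, thousands of binary frames are needed for a stable estimate, which hints at the data volume the project description refers to.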

#5 4D Vision: RGB-T Shape Reconstruction for Robotic Perception and Optimal Grasping / Visión 4D: Reconstrucción de Objetos RGB-T para Percepción Robótica y Manipulación Óptima - Available

| Photogrammetry, Neural Radiance Fields (NeRFs), and Gaussian Splatting are surface and volume rendering techniques designed to recreate 3D shapes from 2D image data. Similarly, methods like Pixel2Mesh have been designed to generate 3D meshes from single color images. In this project, you will survey and test the performance of different existing techniques (traditional and learning-based) for generating 3D shapes from RGB images. Later, the possibility of expanding the chosen technique to account for thermal gradients within the object will be evaluated. The ultimate goal is to design a vision-based policy capable of recreating 4D meshes (3D geometry + temperature gradients) from 2D RGB-T images, so that a robotic arm could leverage the generated 4D mesh to recognize and identify different components, determine their optimal grasping points, and detect potential faults (mechanical deformations and thermal anomalies) without relying on fiducials or standardized interfaces. Keywords: #computer-vision, #thermal-imaging, #manipulation, #shape-reconstruction, #nerf, #3d-rendering |

#6 Perception-Aware Motion Planning: Integrating Actor-Critic Networks with Optimal Control / Planificación de Movimientos Sensible a la Percepción: Integración de Redes Actor-Critic con Control Óptimo - Available

| In this project, you will improve a Multi-staged Warm started Motion Planner (MWMP) developed in a previous activity with ESA. The goal is to replace the first two stages of the current planner (initial trajectory planning + unconstrained optimization) with a perception-aware actor-critic network trained via privilege learning from an expert agent driven by optimal control. You will first survey the current literature on privilege learning and actor-critic networks and then implement and train a control policy that enables a robotic arm to adapt its motion plan to the position of an object in the environment. The policy’s output must then be supervised by optimal control or an MPC solver (constrained optimization). A potential use case for this project is the real-time adaptation of a robot’s motion planning and actions to the location and position of a sample or to off-nominal scenarios, such as misaligned, damaged, or malfunctioning components, based on the pose, location, and dynamics of both the robot and the target object. Keywords: #motion-planning, #machine-learning, #privilege-learning, #mpc, #hybrid-learning |

#7 Advancing single-photon perception for orbital robotics / Avanzando la percepción de fotón-único para la robótica orbital - Unavailable

| In this project, you will explore the benefits and limitations of single-photon imaging for orbital robotic applications, particularly under perceptually degraded conditions such as sun-facing scenarios and eclipses. Your work will start with the annotation of a dataset captured in an orbital-mimicking testbed, comparing images from a conventional monocular camera with those from a novel Single-Photon Avalanche Diode (SPAD) camera. You will assess how SPAD imaging can enhance the efficiency and accuracy of key orbital robotic tasks, with special emphasis on its potential for improving pose/motion estimation. This includes training and refining a SPAD-based CNN pipeline for pose estimation. You will systematically evaluate pose/motion estimation errors under varying frame rates, exposure times, and bit depths. This project is conducted in collaboration with the Computer Vision Lab at École Polytechnique Fédérale de Lausanne (EPFL) in Switzerland. Keywords: #motion-estimation, #pose-estimation, #cnn, #orbital-robotics, #spad |

#8 Teleoperation via ROS2 of a 2-wheel self-balancing robot / Teleoperación vía ROS2 de un robot autoequilibrado de 2 ruedas - Available

| The goal of this project is to remotely operate a 2-wheel self-balancing robot via ROS2. A ROS2 SDK will be provided, which should be deployed on a Raspberry Pi 4B (other potential SBCs might also be evaluated). You will design specific nodes for data acquisition, data visualization, and teleoperation over a local WiFi network. Basic programming skills (Python or C++) are recommended. Prior knowledge of ROS2 is not required. Keywords: #ros, #ros2, #programming, #self-balancing, #teleoperation, #hands-on |

#9 Wheel design for off-road navigation of a 2-wheel self-balancing robot / Diseño de ruedas para navegación todoterreno de un robot autoequilibrado de 2 ruedas - Available

| The goal of this project is to design, build, and test a new wheel design for a robot required to navigate across different types of off-road environments (sand, gravel, grass, etc.). The current 2-wheel self-balancing robot available in our lab uses slick wheels optimized for indoor environments. Your task would be to design and replace these wheels, and perform a quick verification test in a relevant environment. Prior experience in mechanical CAD, 3D modelling, and 3D printing is recommended but not required. Keywords: #mechanical-design, #wheel-design, #self-balancing, #off-road, #cad, #3D-printing, #design |